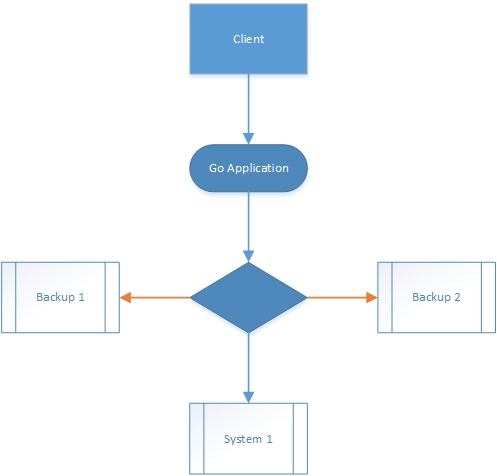

Microsoft Azure offers Azure Service Bus as a means of applying the Decoupled Middleware design pattern, among other things, in your application. This post is a step-by-step guide to implementation. It assumes that you have already established an Azure account and have initialised an associated Service Bus.

Start with the Abstraction

This library abstracts the concept of a Service Bus to a level that is not restricted to MS Azure alone. The ServiceBus and ServiceBusAdapter base classes give any Service Bus technology a place to plug in its own implementation. Having said that, the concrete classes that this library implements are specific to MS Azure Service Bus.
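
To make the idea concrete, here is a minimal sketch of what such an abstraction might look like. The generic type parameter, the member lists, and the InMemoryAdapter class are assumptions inferred from the overrides shown later in this post, not the library's actual source:

```csharp
using System;
using System.Collections.Generic;

// Assumed shape of the event payload used by the bus events.
public class MessageReceivedEventArgs<TMessage> : EventArgs
{
    public MessageReceivedEventArgs(TMessage message) { Message = message; }
    public TMessage Message { get; private set; }
}

// Assumed adapter abstraction: connect, send, receive.
public abstract class ServiceBusAdapter<TMessage>
{
    public abstract void Initialise(string topicName);
    public abstract void SendMessage(string topicName, TMessage message);
    public abstract TMessage ReceiveNextMessage(string topicName, TimeSpan timeout, bool autoAcknowledge = false);
}

// Assumed bus abstraction: two events plus a receive hook.
public abstract class ServiceBus<TMessage>
{
    public abstract event EventHandler<MessageReceivedEventArgs<TMessage>> MessageReceived;
    public abstract event EventHandler<MessageReceivedEventArgs<TMessage>> DuplicateMessageReceived;
    protected abstract void ReceiveNextMessage(string publisherName, TimeSpan timeout, bool autoAcknowledge);
}

// Hypothetical in-memory adapter, only to show that nothing above depends on Azure.
public class InMemoryAdapter : ServiceBusAdapter<string>
{
    private readonly Queue<string> _queue = new Queue<string>();

    public override void Initialise(string topicName) { }

    public override void SendMessage(string topicName, string message)
    {
        _queue.Enqueue(message);
    }

    public override string ReceiveNextMessage(string topicName, TimeSpan timeout, bool autoAcknowledge = false)
    {
        // Returns null when the queue is empty, mirroring a receive that times out.
        return _queue.Count > 0 ? _queue.Dequeue() : null;
    }
}

public static class AbstractionDemo
{
    public static void Main()
    {
        var adapter = new InMemoryAdapter();
        adapter.SendMessage("TestTopic", "hello");
        Console.WriteLine(adapter.ReceiveNextMessage("TestTopic", TimeSpan.Zero)); // prints "hello"
    }
}
```

An Azure-specific adapter would fill in the same three methods using the Service Bus client types, as described in the sections that follow.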

The MS Azure Service Bus

The MSAzureServiceBus class provides a succinct means of interfacing with an MS Azure Service Bus, consuming messages as they arrive. Once it is initialised, MSAzureServiceBus expects you to register a handler that determines what happens when a new message arrives. This functionality is exposed as follows:

Incoming Message-handling

    public override event EventHandler<MessageReceivedEventArgs<BrokeredMessage>> MessageReceived;

    private void OnMessageReceived(MessageReceivedEventArgs<BrokeredMessage> e) {
        // Copy to a local so the null check and the call use the same delegate.
        var handler = MessageReceived;
        if (handler != null) handler(this, e);
    }

Duplicate Message-handling

Similarly, you can register a handler for duplicate messages, that is, messages that MSAzureServiceBus has already processed:

    public override event EventHandler<MessageReceivedEventArgs<BrokeredMessage>> DuplicateMessageReceived;

    private void OnDuplicateMessageReceived(MessageReceivedEventArgs<BrokeredMessage> e) {
        var handler = DuplicateMessageReceived;
        if (handler != null) handler(this, e);
    }

Receiving Messages Explicitly

Event handlers aside, MSAzureServiceBus provides a method designed to retrieve the next available message from MS Azure Service Bus. This method may be invoked on demand, or as part of a continuous loop that polls MS Azure Service Bus and consumes new messages as soon as they become available.

    protected override void ReceiveNextMessage(string publisherName, TimeSpan timeout, bool autoAcknowledge) {
        var message = serviceBusAdapter.ReceiveNextMessage(publisherName, timeout, autoAcknowledge);
        if (message == null) return; // nothing arrived within the timeout

        var isValidMessage = messageValidator.ValidateMessageId(message.MessageId);

        if (isValidMessage) {
            messageValidator.AddMessageIdToCache(message.MessageId);
            OnMessageReceived(new BrokeredMessageReceivedEventArgs(message));
        }
        else {
            // Not valid: raise the duplicate event instead of the regular one.
            OnDuplicateMessageReceived(new BrokeredMessageReceivedEventArgs(message));
        }
    }
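
The continuous loop mentioned above is not shown in this post, so the following is only a sketch of how one might be built. PollingLoop and its members are hypothetical names, and the receive action is a stand-in for a call to ReceiveNextMessage:

```csharp
using System;
using System.Threading;
using System.Threading.Tasks;

// Hypothetical helper: repeatedly invokes a receive action on a
// background task until Stop is called.
public class PollingLoop
{
    private CancellationTokenSource _cts;
    private Task _worker;

    public void Start(Action receiveNext)
    {
        _cts = new CancellationTokenSource();
        var token = _cts.Token;
        _worker = Task.Run(() =>
        {
            // Each iteration is expected to block for at most the receive timeout.
            while (!token.IsCancellationRequested) receiveNext();
        });
    }

    public void Stop()
    {
        _cts.Cancel();
        _worker.Wait(); // let the in-flight receive finish before returning
    }
}

public static class PollingDemo
{
    public static void Main()
    {
        var count = 0;
        var loop = new PollingLoop();

        // Stand-in for ReceiveNextMessage(publisherName, timeout, autoAcknowledge).
        loop.Start(() => { Interlocked.Increment(ref count); Thread.Sleep(10); });

        Thread.Sleep(100);
        loop.Stop();
        Console.WriteLine("Polled {0} times", count);
    }
}
```

Because the receive call carries its own timeout, the loop needs no additional delay between iterations; the Thread.Sleep in the stand-in merely simulates that wait.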

The MS Azure ServiceBus Adapter

The MSAzureServiceBusAdapter class is a Bridge that encapsulates the underlying mechanisms required to connect to MS Azure Service Bus, and to send and receive messages. Let’s consider the functionality in that order:

Initialising a Connection

Firstly, we must establish a NamespaceManager based on the connection-string associated with our MS Azure Service Bus account:

    var connectionString = CloudConfigurationManager.GetSetting("Microsoft.ServiceBus.ConnectionString");
    _namespaceManager = NamespaceManager.CreateFromConnectionString(connectionString);

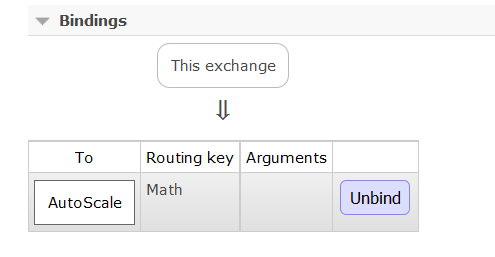

Next, we obtain a reference to the desired Topic, creating the Topic if it does not already exist:

    _topic = !_namespaceManager.TopicExists(topicName)
        ? _namespaceManager.CreateTopic(topicName)
        : _namespaceManager.GetTopic(topicName);

Lastly, we create a Subscription to the Topic, if one does not already exist:

    if (!_namespaceManager.SubscriptionExists(_topic.Path, subscriptionName))
        _namespaceManager.CreateSubscription(_topic.Path, subscriptionName);

The Complete Listing

    public override void Initialise(string topicName) {
        var connectionString = CloudConfigurationManager.GetSetting("Microsoft.ServiceBus.ConnectionString");
        _namespaceManager = NamespaceManager.CreateFromConnectionString(connectionString);

        // Create the Topic on first use; otherwise fetch the existing one.
        _topic = !_namespaceManager.TopicExists(topicName)
            ? _namespaceManager.CreateTopic(topicName)
            : _namespaceManager.GetTopic(topicName);

        if (!_namespaceManager.SubscriptionExists(_topic.Path, subscriptionName))
            _namespaceManager.CreateSubscription(_topic.Path, subscriptionName);

        _isInitialised = true;
    }

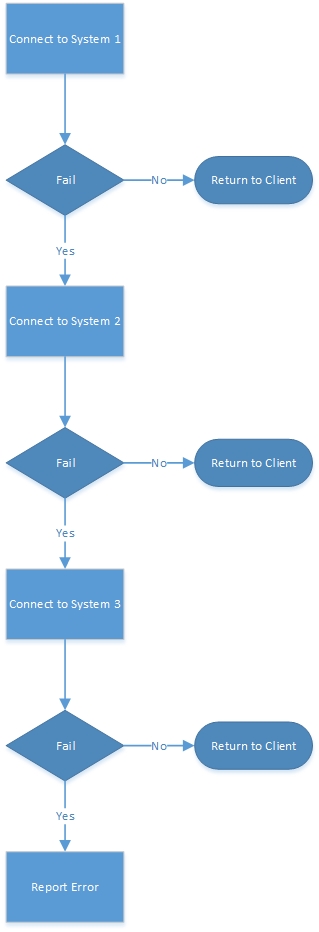

It’s worth noting that every MSAzureServiceBusAdapter method implicitly invokes the Initialise method if a connection to MS Azure Service Bus has not already been established.

Sending Messages

This library offers the means to send messages in the form of BrokeredMessage objects to MS Azure Service Bus. Firstly, we must establish a connection, if one does not already exist:

if (!_isInitialised) Initialise(topicName);

Finally, initialise a TopicClient, if one has not already been established, and simply send the message as-is, in BrokeredMessage-format:

    if (_topicClient == null)
        _topicClient = TopicClient.Create(topicName);

    _topicClient.Send(message);

The Complete Listing

    public override void SendMessage(string topicName, BrokeredMessage message) {
        if (!_isInitialised) Initialise(topicName);

        if (_topicClient == null)
            _topicClient = TopicClient.Create(topicName);

        _topicClient.Send(message);
    }

Receiving Messages

Messages are consumed from MS Azure Service Bus in a serial manner, one after the other. Once again, we must first establish a connection, if one does not already exist:

    if (!_isInitialised)
        Initialise(topicName);

Next, we initialise a SubscriptionClient, if one has not already been established, and declare a BrokeredMessage variable to hold the method’s return value:

    if (_subscriptionClient == null)
        _subscriptionClient = SubscriptionClient.Create(topicName, subscriptionName);

    BrokeredMessage message = null;

Next, we attempt to receive the next available message, returning null if none arrives within the timeout:

    message = _subscriptionClient.Receive(timeout);

    if (message == null)
        return null;

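Note that this method defines an “autoAcknowledge” parameter. If false, the message is returned as-is and the caller is responsible for acknowledging it. If true, the method acknowledges consumption on the caller’s behalf by completing the message: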

    if (!autoAcknowledge) return message;

    message.Complete();

Finally, the receive logic is wrapped in a try block. If an Exception occurs, we abandon the message and rethrow; otherwise, we return the message:

    catch (Exception) {
        if (message != null) message.Abandon();
        throw;
    }

    return message;

The Complete Listing

    public override BrokeredMessage ReceiveNextMessage(string topicName, TimeSpan timeout, bool autoAcknowledge = false) {
        if (!_isInitialised)
            Initialise(topicName);

        if (_subscriptionClient == null)
            _subscriptionClient = SubscriptionClient.Create(topicName, subscriptionName);

        BrokeredMessage message = null;

        try {
            message = _subscriptionClient.Receive(timeout);

            if (message == null)
                return null;

            // Leave acknowledgement to the caller unless asked to do it here.
            if (!autoAcknowledge) return message;

            message.Complete();
        }
        catch (Exception) {
            // Release the message so that it can be received again.
            if (message != null) message.Abandon();
            throw;
        }

        return message;
    }
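
When autoAcknowledge is false, settling the message is left to the caller. The following sketch shows one way that might look. FakeMessage is a hypothetical stand-in that exposes only Complete and Abandon, mirroring the BrokeredMessage methods used above, and the Settle helper is likewise an assumption rather than part of the library:

```csharp
using System;

// Hypothetical stand-in for BrokeredMessage; it only records how it was settled.
public class FakeMessage
{
    public FakeMessage() { State = "Locked"; }
    public string State { get; private set; }
    public void Complete() { State = "Completed"; }
    public void Abandon() { State = "Abandoned"; }
}

public static class ManualAcknowledgeDemo
{
    // Runs the handler, then completes the message on success
    // or abandons it if the handler throws.
    public static void Settle(FakeMessage message, Action<FakeMessage> handler)
    {
        try
        {
            handler(message);
            message.Complete();
        }
        catch (Exception)
        {
            // Swallowed here to keep the demo short; real code may prefer to rethrow.
            message.Abandon();
        }
    }

    public static void Main()
    {
        var ok = new FakeMessage();
        Settle(ok, m => { /* processing succeeds */ });
        Console.WriteLine(ok.State); // prints "Completed"

        var bad = new FakeMessage();
        Settle(bad, m => { throw new InvalidOperationException("processing failed"); });
        Console.WriteLine(bad.State); // prints "Abandoned"
    }
}
```

Completing only after the handler succeeds means a failure during processing leaves the message available for another attempt, at the cost of possibly seeing it more than once.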

A Practical Example

Let’s build a small Console Application to demonstrate the concept. Our application will interface with MS Azure Service Bus and continuously poll for messages until the application terminates:

    var serviceBus = new MSAzureServiceBus(new MSAzureServiceBusAdapter(), new MessageValidator());
    serviceBus.MessageReceived += serviceBus_MessageReceived;

    private static void serviceBus_MessageReceived(object sender, MessageReceivedEventArgs<BrokeredMessage> e) {
        Console.WriteLine(e.Message.MessageId);
    }

Message Validation

Notice the MessageValidator instance in the above code snippet. Let’s pause for a moment and consider the mechanics.

Each message carries an identifier in GUID format. Our application keeps an index of the identifiers it has already processed. Incoming messages are validated by comparing the incoming message ID to the IDs stored within the index. If a match is found, the message is determined to be a duplicate, and appropriate action can be taken.

Our inbound message IDs are stored in a simple HashSet of type String. We use a HashSet here to achieve constant time complexity for lookups. Essentially, the time taken to perform a lookup will, on average, remain constant (external factors such as garbage collection aside) regardless of HashSet size:

    private readonly HashSet<string> _cache = new HashSet<string>();

    public IEnumerable<string> Cache { get { return _cache; } }

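Message IDs are normalised by removing all hyphens, if any exist, both when they are added and when they are looked up, so that the same standard is applied to message IDs regardless of format:

    public void AddMessageIdToCache(string messageId) {
        _cache.Add(Normalise(messageId));
    }

    // Returns true if the ID has not been seen before, false if it is a duplicate.
    public bool ValidateMessageId(string messageId) {
        return !_cache.Contains(Normalise(messageId));
    }

    // Remove hyphens so that hyphenated and unhyphenated GUIDs compare equal.
    private static string Normalise(string messageId) {
        return messageId.Replace("-", string.Empty);
    }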
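
As an illustration of the duplicate check, here is a self-contained sketch of a variant that merges the lookup and the insert into a single call. MessageIdCache and TryRegister are hypothetical names, and the case-insensitive comparison is an added assumption, not something the library is known to do:

```csharp
using System;
using System.Collections.Generic;

// Hypothetical variant of the validator: one call both checks and records an ID.
public class MessageIdCache
{
    // Assumption: IDs are compared case-insensitively.
    private readonly HashSet<string> _seen = new HashSet<string>(StringComparer.OrdinalIgnoreCase);

    private static string Normalise(string messageId)
    {
        return messageId.Replace("-", string.Empty);
    }

    // Returns true the first time an ID is seen, false for a duplicate.
    public bool TryRegister(string messageId)
    {
        // HashSet.Add reports whether the element was actually added.
        return _seen.Add(Normalise(messageId));
    }
}

public static class ValidatorDemo
{
    public static void Main()
    {
        var cache = new MessageIdCache();
        var id = Guid.NewGuid();

        Console.WriteLine(cache.TryRegister(id.ToString("D"))); // hyphenated form: prints "True"
        Console.WriteLine(cache.TryRegister(id.ToString("N"))); // same ID, no hyphens: prints "False"
    }
}
```

Relying on the return value of HashSet.Add avoids a separate Contains call, so each incoming ID costs a single hash lookup.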

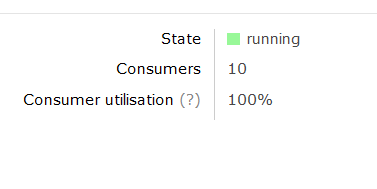

Once initialised, the application will continuously poll MS Azure Service Bus until the return key is pressed:

    serviceBus.StartListening("TestTopic", new TimeSpan(0, 0, 1), true);
    Console.WriteLine("Listening to the Service Bus. Press Enter to quit...");

    // ReadLine blocks until the return key is pressed.
    Console.ReadLine();

    serviceBus.StopListening();
    Console.WriteLine("Disconnecting...");

The Complete Listing

    internal class Program {
        private static void Main(string[] args) {
            var serviceBus = new MSAzureServiceBus(new MSAzureServiceBusAdapter(), new MessageValidator());
            serviceBus.MessageReceived += serviceBus_MessageReceived;

            // Poll "TestTopic" with a one-second timeout, acknowledging automatically.
            serviceBus.StartListening("TestTopic", new TimeSpan(0, 0, 1), true);
            Console.WriteLine("Listening to the Service Bus. Press Enter to quit...");

            // ReadLine blocks until the return key is pressed.
            Console.ReadLine();

            serviceBus.StopListening();
            Console.WriteLine("Disconnecting...");
        }

        private static void serviceBus_MessageReceived(object sender, MessageReceivedEventArgs<BrokeredMessage> e) {
            Console.WriteLine(e.Message.MessageId);
        }
    }

To try it out, simply send a new message to the TestTopic topic on your MS Azure Service Bus instance. The application will consume the message and display its message ID on-screen.
